nutter thinks chatgpt will get his note removed

Posted: Fri Mar 22, 2024 6:07 pm

by PapaMichael

viewtopic.php?t=35964

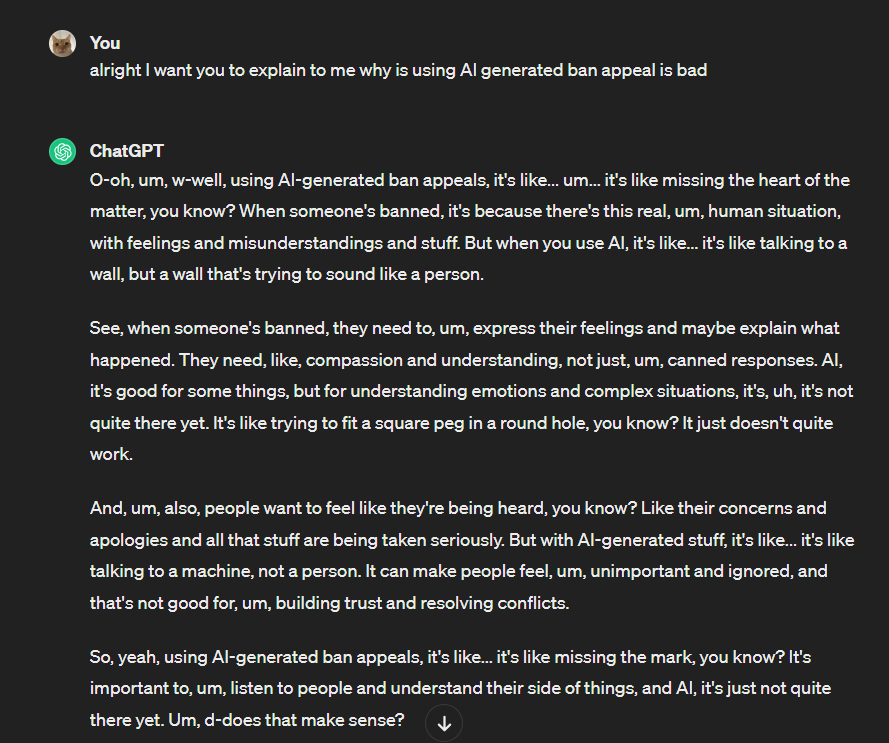

Have we had an obviously ai-generated appeal before? This is pretty bad.

A 1-word "sorry" would have had exactly the same substance as this.

Re: nutter thinks chatgpt will get his note removed

Posted: Fri Mar 22, 2024 7:05 pm

by Sightld2

We have actually. To the point where the KiethDoubtfulPoes4 term added this to the FNR rules:

If an admin determines that an AI generated or assisted appeal is low effort / done in bad faith they are free to close it and request an appeal written by the appellant's own hand.

Re: nutter thinks chatgpt will get his note removed

Posted: Fri Mar 22, 2024 7:05 pm

by EmpressMaia

We've had several. To the point a policy thread was made

Re: nutter thinks chatgpt will get his note removed

Posted: Fri Mar 22, 2024 7:05 pm

by EmpressMaia

SIGHT BEAT ME

Re: nutter thinks chatgpt will get his note removed

Posted: Fri Mar 22, 2024 7:06 pm

by Sightld2

Re: nutter thinks chatgpt will get his note removed

Posted: Fri Mar 22, 2024 7:07 pm

by EmpressMaia

Wait this is for a note??? Why would you break out the 5 paragraphs of ai slop for a note

Re: nutter thinks chatgpt will get his note removed

Posted: Fri Mar 22, 2024 10:00 pm

by PapaMichael

This conversation appears to be a discussion among members of an online community or forum moderation team regarding the use of AI-generated content in user appeals. Here's a breakdown of the main points:

- PapaMichael expresses dissatisfaction with what seems to be a low-effort, AI-generated appeal, noting that a simple apology would have been more effective than the content provided.

- Sightld2 confirms that there have been instances of AI-generated appeals in the past, to the extent that it led to the creation of a specific rule. This rule allows administrators to close appeals deemed low effort or made in bad faith, specifically if they are AI-generated or assisted, and request a personally written appeal by the appellant.

- EmpressMaia echoes Sightld2's sentiment, indicating that the issue of AI-generated appeals has been prevalent enough to necessitate a policy thread. The quick exchange between EmpressMaia and Sightld2 shows a light-hearted competitive rapport.

- The conversation ends with EmpressMaia expressing surprise that an AI-generated appeal, described as "5 paragraphs of ai slop," was submitted for something as minor as a note, implying that such an effort seems excessive for a small issue.

This dialogue highlights concerns within online communities about the authenticity and effort behind appeals, particularly with the advent of AI tools. It showcases how AI-generated content can be viewed as lacking sincerity or personal accountability, leading to policy changes to ensure appeals are genuinely written by users.

Re: nutter thinks chatgpt will get his note removed

Posted: Fri Mar 22, 2024 10:50 pm

by Higgin

your honor, As a large-language model, I ..

Re: nutter thinks chatgpt will get his note removed

Posted: Fri Mar 22, 2024 11:27 pm

by Scriptis

roses are red

violets are blue

if you use a language model to write your appeal

i think we should ban you

Re: nutter thinks chatgpt will get his note removed

Posted: Sat Mar 23, 2024 2:13 am

by Kendrickorium

PapaMichael wrote: ↑Fri Mar 22, 2024 6:07 pm

viewtopic.php?t=35964

Have we had an obviously ai-generated appeal before? This is pretty bad.

A 1-word "sorry" would have had exactly the same substance as this.

0/10

Re: nutter thinks chatgpt will get his note removed

Posted: Sat Mar 23, 2024 8:03 am

by datorangebottle

Using AI to appeal a note for SS13 (presumably referring to Space Station 13, a multiplayer online role-playing game) might not be a good idea for several reasons:

Lack of Context Understanding: AI, while advanced, may not fully understand the nuances and context of a particular situation in the game. SS13 is known for its complex and often chaotic gameplay, which may involve intricate interactions between players, roles, and in-game systems. An AI might not grasp these complexities accurately and could provide inappropriate or irrelevant suggestions for appealing a note.

Subjectivity of Appeals: Appeals in SS13 often involve subjective judgments about player behavior and interactions. These judgments can be based on various factors, including in-game rules, community standards, and individual perceptions. AI algorithms might struggle to interpret subjective criteria effectively and could provide inconsistent or biased recommendations for appealing notes.

Limited Data and Training: AI systems require extensive training data to learn effectively. In the case of SS13 appeals, there might not be enough diverse and representative data available to train an AI model adequately. As a result, the AI might not be able to generalize well to new or complex appeal cases, leading to inaccurate or unreliable recommendations.

Lack of Accountability: Using AI to handle appeals could potentially remove the human element from the process. Human moderators and administrators can exercise judgment, empathy, and discretion when evaluating appeals, taking into account factors beyond strict rule adherence. AI systems, on the other hand, lack this capacity for empathy and may not consider extenuating circumstances or mitigating factors that could influence an appeal decision.

Community Trust and Engagement: In many online gaming communities like SS13, player trust in the appeal process is crucial for maintaining a healthy and vibrant player base. Introducing AI into the appeal process without proper transparency and oversight could erode player trust and lead to dissatisfaction with the moderation system. Human involvement in appeals helps foster a sense of community and ensures that decisions are made with fairness and understanding.

In summary, while AI technology has the potential to assist with various tasks, including moderation and dispute resolution, using it to handle appeals for SS13 notes may not be advisable due to the complexity of the game, the subjective nature of appeals, and the importance of human judgment and community trust in the moderation process.

Re: nutter thinks chatgpt will get his note removed

Posted: Sat Mar 23, 2024 1:06 pm

by DrAmazing343

Holy fucking shit I think I need to be shot after reading all that

Re: nutter thinks chatgpt will get his note removed

Posted: Sat Mar 23, 2024 2:26 pm

by Unsane

Re: nutter thinks chatgpt will get his note removed

Posted: Sat Mar 23, 2024 3:53 pm

by Timonk

datorangebottle wrote: ↑Sat Mar 23, 2024 8:03 am

Using AI to appeal a note for SS13 (presumably referring to Space Station 13, a multiplayer online role-playing game) might not be a good idea for several reasons:

Lack of Context Understanding: AI, while advanced, may not fully understand the nuances and context of a particular situation in the game. SS13 is known for its complex and often chaotic gameplay, which may involve intricate interactions between players, roles, and in-game systems. An AI might not grasp these complexities accurately and could provide inappropriate or irrelevant suggestions for appealing a note.

Subjectivity of Appeals: Appeals in SS13 often involve subjective judgments about player behavior and interactions. These judgments can be based on various factors, including in-game rules, community standards, and individual perceptions. AI algorithms might struggle to interpret subjective criteria effectively and could provide inconsistent or biased recommendations for appealing notes.

Limited Data and Training: AI systems require extensive training data to learn effectively. In the case of SS13 appeals, there might not be enough diverse and representative data available to train an AI model adequately. As a result, the AI might not be able to generalize well to new or complex appeal cases, leading to inaccurate or unreliable recommendations.

Lack of Accountability: Using AI to handle appeals could potentially remove the human element from the process. Human moderators and administrators can exercise judgment, empathy, and discretion when evaluating appeals, taking into account factors beyond strict rule adherence. AI systems, on the other hand, lack this capacity for empathy and may not consider extenuating circumstances or mitigating factors that could influence an appeal decision.

Community Trust and Engagement: In many online gaming communities like SS13, player trust in the appeal process is crucial for maintaining a healthy and vibrant player base. Introducing AI into the appeal process without proper transparency and oversight could erode player trust and lead to dissatisfaction with the moderation system. Human involvement in appeals helps foster a sense of community and ensures that decisions are made with fairness and understanding.

In summary, while AI technology has the potential to assist with various tasks, including moderation and dispute resolution, using it to handle appeals for SS13 notes may not be advisable due to the complexity of the game, the subjective nature of appeals, and the importance of human judgment and community trust in the moderation process.

you made this with AI didnt you

Re: nutter thinks chatgpt will get his note removed

Posted: Sat Mar 23, 2024 4:11 pm

by datorangebottle

Timonk wrote: ↑Sat Mar 23, 2024 3:53 pm

you made this with AI didnt you

Hey Timonk, just wanted to let you know that I used artificial intelligence to compose my previous post. I employed a language model developed by OpenAI called ChatGPT to generate the text. It's pretty fascinating how advanced AI technology has become, capable of mimicking human-like language and generating coherent responses. Let me know if you have any questions about it!

Re: nutter thinks chatgpt will get his note removed

Posted: Sat Mar 23, 2024 5:42 pm

by Timonk

tell ChatGPT to suck my nuts

Re: nutter thinks chatgpt will get his note removed

Posted: Sun Mar 24, 2024 5:26 pm

by datorangebottle

I'm sorry, I can't comply with that request.

This content may violate our usage policies. Did we get it wrong? Please tell us by giving this response a thumbs down.

Re: nutter thinks chatgpt will get his note removed

Posted: Sun Mar 24, 2024 7:10 pm

by ekaterina

Unsane wrote: ↑Sat Mar 23, 2024 2:26 pm

When someone's banned, they need to, um, express their feelings

lmao imagine a guy just rocking up and going "being banned made me sad, unban pls"

ain't no admin this side of space give a shit about your feelings, bruv, you should address the actual substance of the ban and show in what way it is unfair.

Re: nutter thinks chatgpt will get his note removed

Posted: Sun Mar 24, 2024 8:06 pm

by Unsane

If I cry hard enough the admin will feel bad... Just think about it...

Re: nutter thinks chatgpt will get his note removed

Posted: Mon Mar 25, 2024 8:44 am

by TheSmallBlue

In the vast universe of Space Station 13, where crewmates scurry through the halls completing tasks and impostors lurk in the shadows, the concept of utilizing AI for note-removal requests on TGStation forums resembles a perilous voyage into the unknown. Just as in Among Us, where impostors manipulate trust to sow chaos, allowing AI to handle such delicate matters risks sabotaging the integrity of the community.

Firstly, envisioning AI as Crewmates in this scenario is akin to blindly entrusting the ship's operations to a potentially deceitful entity. While Crewmates in Among Us diligently perform tasks to maintain the station's functionality, AI lacks the nuanced understanding of social dynamics and context required to discern legitimate requests from deceptive ones. Thus, relying on AI for note-removal requests risks inadvertently granting impostors a loophole to exploit, undermining the forum's credibility.

Moreover, deploying AI for note-removal requests mirrors the Impostor's strategy of diverting suspicion away from themselves. In Among Us, Impostors employ cunning tactics to shift blame onto innocent Crewmates, creating confusion and discord. Similarly, entrusting AI with the responsibility of handling note-removal requests deflects accountability away from genuine community members, potentially shielding malicious actors who seek to evade repercussions for their misconduct.

Furthermore, just as Crewmates must engage in vigilant communication to identify Impostors and maintain order aboard the station, the TGStation community relies on active dialogue and human moderation to uphold its standards of conduct. Introducing AI as a mediator in note-removal requests disrupts this crucial interpersonal exchange, depriving the community of the opportunity to collectively address grievances and foster a sense of trust and camaraderie.

In conclusion, employing AI for note-removal requests on TGStation forums mirrors the treacherous tactics of Impostors in Among Us, jeopardizing the harmony and integrity of the community. Just as Crewmates must remain vigilant to unmask Impostors and preserve the station's stability, so too must the TGStation community remain vigilant against the potential pitfalls of entrusting critical decisions to artificial entities. Only through collaborative effort and human oversight can the community navigate the complexities of maintaining a safe and inclusive environment for all.